Configuring Virtual Networking (PVE)

Overview

The platform's data and application node VMs ("the platform nodes") are connected through three networks:

- Management network — used for user access to the platform dashboard and for direct access to the platform nodes.

- Data-path (client) network — used for internal communication among the data and application nodes.

- Interconnect network — used for internal communication among the data nodes.

To allow proper communication, you need to create and map network bridges for these networks, as outlined in this guide. Repeat the procedure for each Proxmox VE hypervisor host machine ("PVE host") in your platform's PVE cluster.

Prerequisites

Before you begin, ensure that you have administrative access to the platform's PVE cluster, and that each PVE host in the cluster has the following network interfaces:

- A single-port 1 Gb (minimum) NIC for the management network

- For hosting data-node VMs — a dual-port 10 Gb (minimum) NIC for the data-path (client) and interconnect networks

- For hosting application-node VMs only — a single-port 10 Gb (minimum) NIC for the data-path (client) network
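As a pre-flight aid, the requirements above can be expressed as a small check. The following Python sketch is illustrative only: the `Nic` record, the `missing_requirements` function, and the inventory values are hypothetical and aren't part of the platform or of PVE. The sketch also assumes that the listed port counts and speeds are minimums.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    """One physical NIC in a hypothetical host inventory."""
    name: str
    ports: int
    speed_gbps: float


def missing_requirements(nics, hosts_data_nodes):
    """Return a list of problems; an empty list means the host qualifies."""
    problems = []
    remaining = list(nics)

    # Data-node hosts need two 10 Gb ports (data-path + interconnect);
    # application-node-only hosts need one 10 Gb port (data-path).
    needed_ports = 2 if hosts_data_nodes else 1
    fast = next(
        (n for n in remaining
         if n.speed_gbps >= 10 and n.ports >= needed_ports),
        None,
    )
    if fast is None:
        problems.append(
            f"no 10 Gb (or faster) NIC with at least {needed_ports} port(s)"
        )
    else:
        remaining.remove(fast)

    # The management network needs its own NIC of 1 Gb or faster.
    if not any(n.speed_gbps >= 1 for n in remaining):
        problems.append("no separate 1 Gb (or faster) NIC for management")

    return problems


# Example inventory for a host that runs data-node VMs (made-up values).
inventory = [Nic("onboard", ports=1, speed_gbps=1),
             Nic("pcie-1", ports=2, speed_gbps=10)]
print(missing_requirements(inventory, hosts_data_nodes=True))
```

An empty list means that the example inventory satisfies all the listed requirements for the selected host role.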

Configuring the Management Network

The management network is usually connected to the default existing bridge "vmbr0", and is mapped to the management port.

It's recommended that you add a comment marking this interface as "management":

in the PVE GUI, choose the relevant PVE host, select

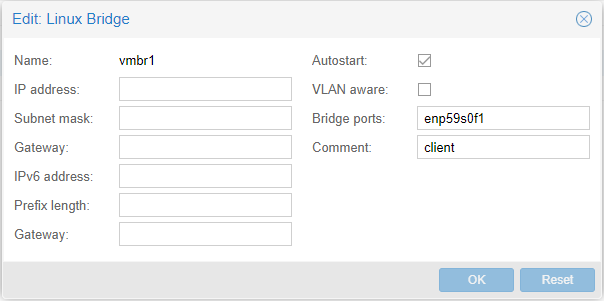

Configuring the Data-Path (Client) Network

To configure the data-path (client) network, in the PVE GUI, choose the relevant PVE host and select

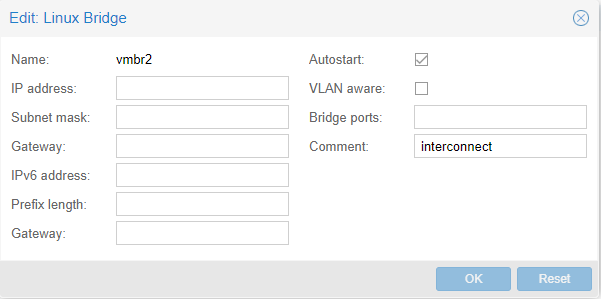
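On a PVE host, bridges that you create in the GUI are stored as stanzas in the host's /etc/network/interfaces file. As a rough illustration of what a bridge definition for the data-path network might look like, the following Python sketch renders such a stanza. The bridge name `vmbr1`, the port name `ens1f0`, and the `bridge_stanza` helper are assumptions made for this example; use the names from your own environment, and treat the GUI as the authoritative way to apply the configuration.

```python
def bridge_stanza(bridge, port, address=None, gateway=None):
    """Render one Linux-bridge stanza in /etc/network/interfaces syntax."""
    # A bridge without its own IP address uses the "manual" method.
    method = "static" if address else "manual"
    lines = [f"auto {bridge}", f"iface {bridge} inet {method}"]
    if address:
        lines.append(f"\taddress {address}")
    if gateway:
        lines.append(f"\tgateway {gateway}")
    # Attach the physical port; STP off and zero forwarding delay
    # match the values that PVE typically writes for new bridges.
    lines += [f"\tbridge-ports {port}", "\tbridge-stp off", "\tbridge-fd 0"]
    return "\n".join(lines) + "\n"


# Hypothetical names: replace with the bridge and port of your host.
print(bridge_stanza("vmbr1", "ens1f0"))
```

The same helper can be called with a different bridge name and port to sketch the interconnect bridge on hosts that run data-node VMs.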

Configuring the Interconnect Network

To configure the interconnect network, in the PVE GUI, choose the relevant PVE host and select

Restarting the PVE Host

When you're done, restart the PVE host to apply your changes.

Verifying the Configuration

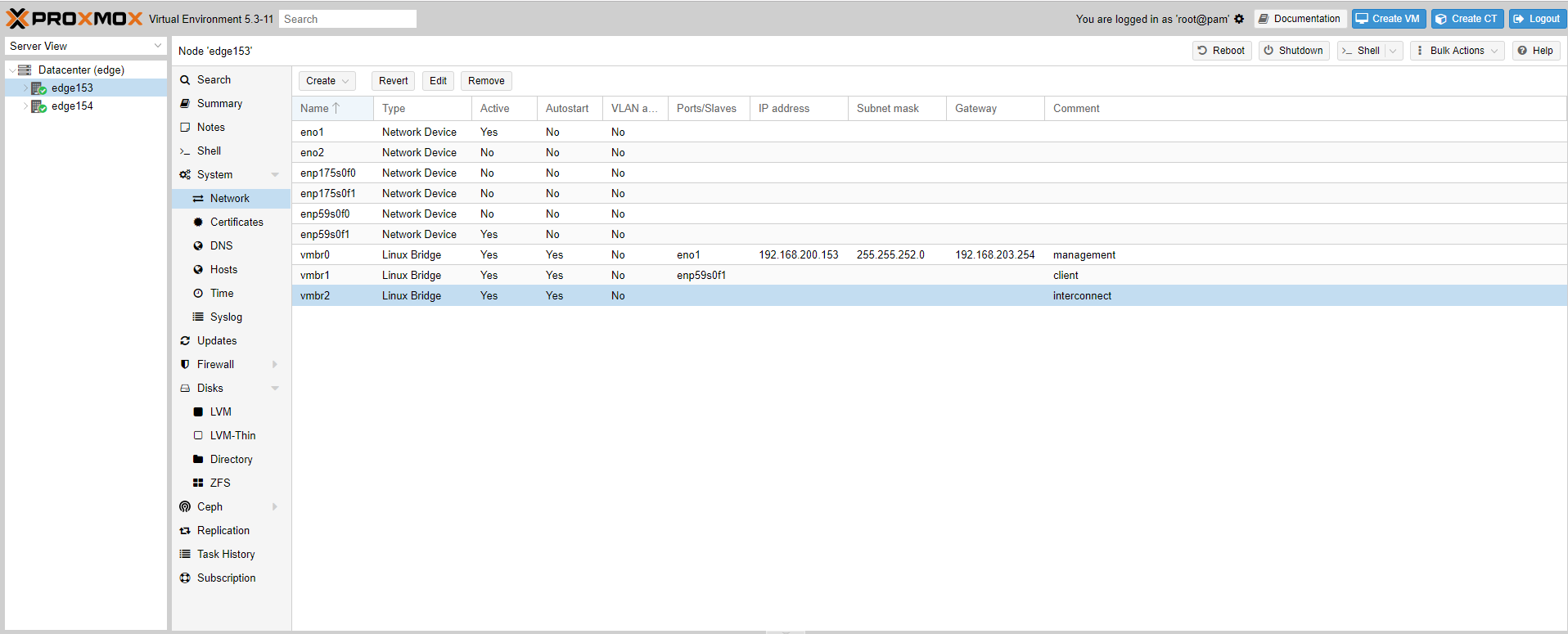

When you're done (after the host has restarted), you can verify your configuration in the PVE GUI, as demonstrated in the following image; (the IP addresses and interface names might be different in your configuration):
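If you prefer to double-check from a script as well, one possible approach is to parse the host's /etc/network/interfaces file and confirm that each expected bridge is attached to the expected port. The following Python sketch assumes hypothetical bridge and port names and a simplified view of the file format; the `parse_bridges` and `check_bridges` helpers are illustrative and aren't part of PVE.

```python
def parse_bridges(text):
    """Map each bridge name to the list of ports named in bridge-ports."""
    bridges = {}
    current = None
    for raw in text.splitlines():
        words = raw.split()
        if not words or words[0].startswith("#"):
            continue  # skip blank lines and comments
        if words[0] == "iface":
            current = words[1] if len(words) > 1 else None
        elif words[0] in ("bridge-ports", "bridge_ports") and current:
            # "none" marks a bridge without a physical port.
            bridges[current] = [p for p in words[1:] if p != "none"]
    return bridges


def check_bridges(text, expected):
    """Compare the parsed bridges with a {bridge: port} mapping."""
    found = parse_bridges(text)
    problems = []
    for bridge, port in expected.items():
        if bridge not in found:
            problems.append(f"{bridge}: bridge not defined")
        elif port not in found[bridge]:
            problems.append(
                f"{bridge}: expected port {port}, found {found[bridge]}"
            )
    return problems


if __name__ == "__main__":
    # Hypothetical mapping: adjust to the names used on your PVE host.
    expected = {"vmbr0": "eno1", "vmbr1": "ens1f0", "vmbr2": "ens1f1"}
    with open("/etc/network/interfaces") as f:
        for problem in check_bridges(f.read(), expected):
            print(problem)
```

When run on a PVE host, the script prints one line for each mismatch and prints nothing when every expected bridge is found with its port.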