
Accelerate the deployment and management of your AI applications with the Iguazio MLOps Platform

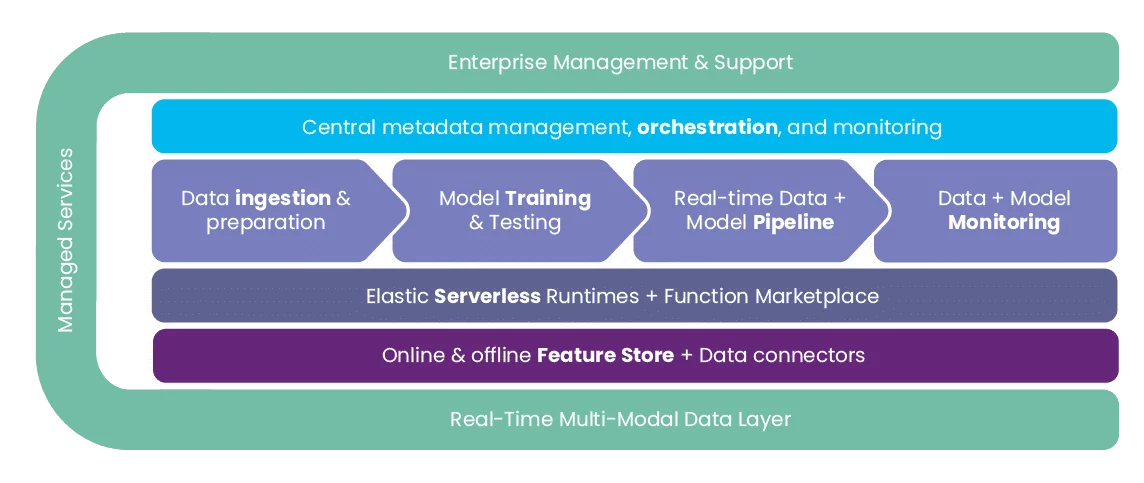

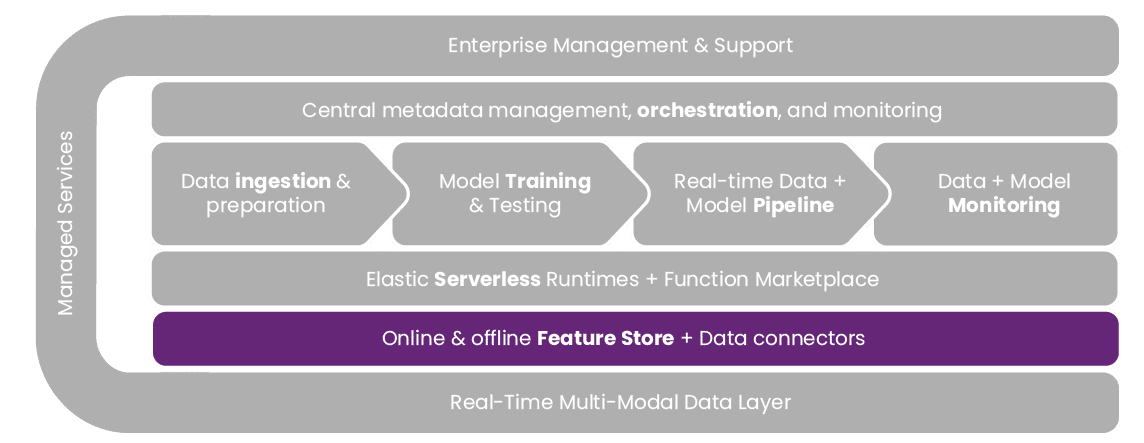

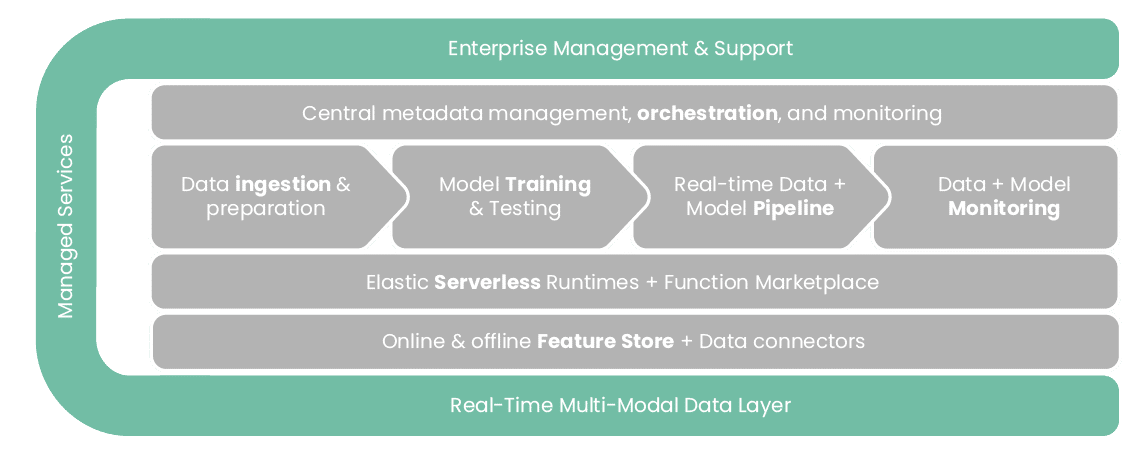

The Iguazio MLOps Platform enables you to develop, deploy and manage real-time AI applications at scale. It gives data science, data engineering and DevOps teams one platform to operationalize machine learning and rapidly deploy operational ML pipelines with a CI/CD approach. The platform includes an online and offline feature store that is fully integrated with automated model monitoring, drift detection and automatic retraining, as well as model serving and dynamic scaling, all packaged in an open, managed platform.

Enable CI/CD for ML by automating the entire ML pipeline, from data collection, preparation and training to rapid deployment and ongoing monitoring in production. Manage your ML pipeline end-to-end in a full-stack, user-friendly environment featuring a fully integrated feature store, powerful data transformation and real-time feature engineering, ML lifecycle orchestration and automated real-time ML pipelines.

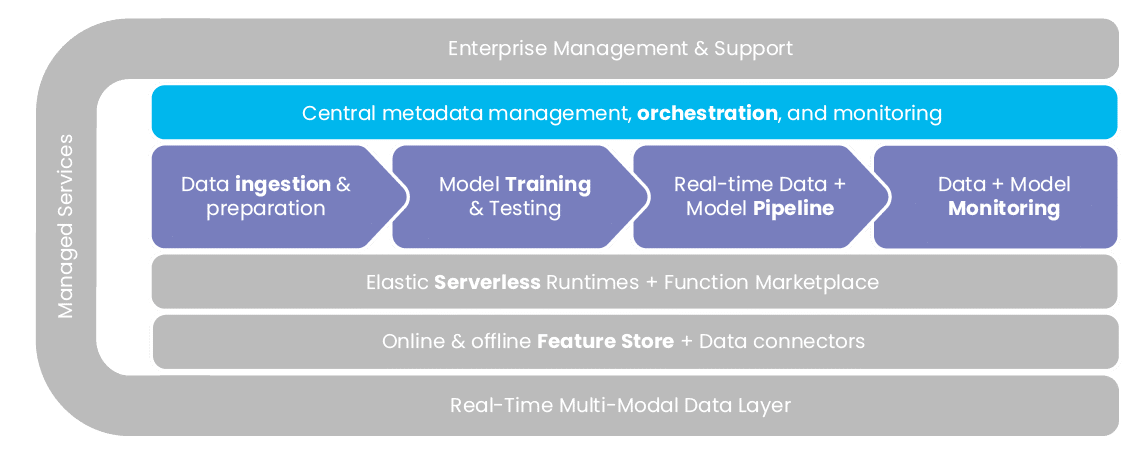

Central Metadata Management

Scaling up your organization’s AI infrastructure is vital to meeting business goals and accelerating time-to-market. The Iguazio platform enables ML teams to manage all artifacts throughout their lifecycle, with automatic data and model versioning and a record of all experiment information captured during model training. With built-in lifecycle management, the whole team can easily compare and reproduce experiments, gain visibility into model governance, and avoid the complexity of implementing custom logic to manage those artifacts. With the Iguazio MLOps Platform, data engineers, data scientists and MLOps engineers work in a unified environment with processes that increase productivity right out of the box.

Iguazio provides a generic, easy-to-use mechanism to describe and track the code, metadata, inputs and outputs of machine learning tasks (executions). Tracked elements are stored in a database, and all running and historical jobs are presented in a single report.
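To make the tracking idea concrete, here is a minimal sketch of an execution tracker that records the code (as a hash), parameters, inputs, outputs and state of each run in a database and returns running and historical runs in one report. The class `RunTracker`, its methods and the SQLite schema are hypothetical illustrations, not the MLRun API; the parameter and accuracy values in the usage section are made up.

```python
import hashlib
import json
import sqlite3
import time
import uuid


class RunTracker:
    """Hypothetical execution tracker (not the MLRun API): records the code,
    parameters, inputs, outputs and state of each run in SQLite."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            """CREATE TABLE IF NOT EXISTS runs (
                   id INTEGER PRIMARY KEY AUTOINCREMENT,
                   uid TEXT UNIQUE, name TEXT, code_hash TEXT,
                   params TEXT, inputs TEXT, outputs TEXT,
                   state TEXT, started REAL)"""
        )

    def start(self, name, code, params, inputs):
        # Hash the source so runs with identical code can be identified later.
        uid = uuid.uuid4().hex
        code_hash = hashlib.sha256(code.encode()).hexdigest()
        self.db.execute(
            "INSERT INTO runs (uid, name, code_hash, params, inputs, outputs, state, started) "
            "VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
            (uid, name, code_hash, json.dumps(params), json.dumps(inputs),
             json.dumps({}), "running", time.time()),
        )
        self.db.commit()
        return uid

    def finish(self, uid, outputs):
        # Store the run's outputs and mark it as completed.
        self.db.execute(
            "UPDATE runs SET outputs = ?, state = 'completed' WHERE uid = ?",
            (json.dumps(outputs), uid),
        )
        self.db.commit()

    def report(self):
        # One report covering both running and historical (completed) runs.
        rows = self.db.execute(
            "SELECT uid, name, state, code_hash, params, inputs, outputs "
            "FROM runs ORDER BY id"
        ).fetchall()
        return [
            {"uid": u, "name": n, "state": s, "code_hash": h,
             "params": json.loads(p), "inputs": json.loads(i), "outputs": json.loads(o)}
            for u, n, s, h, p, i, o in rows
        ]


if __name__ == "__main__":
    tracker = RunTracker()
    run_a = tracker.start("train", "def train(lr): ...", {"lr": 0.1}, {"data": "train.csv"})
    tracker.finish(run_a, {"accuracy": 0.91})
    tracker.start("train", "def train(lr): ...", {"lr": 0.01}, {"data": "train.csv"})
    for row in tracker.report():
        print(row["state"], row["params"], row["outputs"])
```

Because every run is a row with the same structure, comparing or reproducing experiments reduces to querying the table rather than writing custom bookkeeping per project.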

MLOps Orchestration

Iguazio is natively integrated with Kubeflow Pipelines to compose, deploy and manage end-to-end machine learning workflows through a UI and a set of services. To enable scalability, Kubeflow Pipelines works with Iguazio’s MLRun, orchestrating various horizontally scalable and GPU-accelerated data and ML frameworks. A single logical pipeline step may run on a dozen parallel instances of TensorFlow, Spark, or Nuclio (Iguazio’s serverless functions).

Users can run multiple experiments in parallel, each using a different combination of algorithm functions and/or parameter sets (hyperparameters), and automatically select the best result. By running AutoML over parallel functions (microservices) and data, complex tasks run in a fraction of the time with fewer resources, and the selected implementation is deployed to production with one click.
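The parallel-experiment idea can be sketched as a grid search that evaluates every hyperparameter combination concurrently and keeps the best score. This is a minimal, local illustration: `train_and_score` is a hypothetical toy objective standing in for a real training job, and a thread pool stands in for the platform dispatching each trial as a separate function or job.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def train_and_score(params):
    """Hypothetical stand-in for a training job; returns a validation score.
    The toy score peaks at lr=0.1, depth=4 purely for illustration."""
    return -((params["lr"] - 0.1) ** 2) - 0.01 * (params["depth"] - 4) ** 2


def grid_search(grid, objective, max_workers=4):
    """Evaluate every parameter combination in parallel and keep the best."""
    keys = sorted(grid)
    candidates = [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]
    # Threads only dispatch work here; real trials would run as separate jobs.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(objective, candidates))
    results = list(zip(candidates, scores))
    best_params, best_score = max(results, key=lambda pair: pair[1])
    return best_params, best_score, results


if __name__ == "__main__":
    grid = {"lr": [0.01, 0.1, 1.0], "depth": [2, 4, 8]}
    best, score, results = grid_search(grid, train_and_score)
    print(f"evaluated {len(results)} combinations; best={best} score={score:.4f}")
```

Grid search is used here only because it is the simplest selection strategy to show; random or Bayesian search would plug into the same "run in parallel, pick the best" structure.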

Data Ingestion & Preparation

Nuclio, Iguazio’s open-source serverless framework, was built with machine learning pipelines in mind and tackles ingestion with the following capabilities:

Model Training & Testing

With Iguazio, customers still benefit from serverless advantages such as on-demand resource utilization, auto-scaling and automation, even for data-intensive and batch-oriented tasks. Different engines wrap open-source tools such as Spark, TensorFlow, Horovod and Nuclio to handle scalability and parallelism. These engines run over Iguazio’s real-time data layer, beneath an abstraction layer that eliminates operational tasks such as building, monitoring and artifact tracking, so you can code once and run on different runtimes with two lines of code.

Real-time Data & Model Serving Pipeline

Iguazio’s serverless framework is built from the ground up to support low-latency real-time data processing. The model can be triggered using different streaming engines without the need to write additional code. Similarly, data pre-processing and transformation processes can take place using the same framework and tools, while connecting those different functions can be done as easily as passing parameters to functions. Because the same building blocks are used throughout the pipeline, it is simple to change the pipeline over time while ensuring that each component in the pipeline remains fully scalable.
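The idea that pipeline steps are interchangeable building blocks connected by passing data from one to the next can be sketched with plain function composition. The names `chain`, `preprocess`, `predict` and `postprocess` are hypothetical, and the "model" is a fixed linear rule; this is not the Nuclio or MLRun serving API.

```python
from typing import Any, Callable, Dict

Event = Dict[str, Any]
Step = Callable[[Event], Event]


def chain(*steps: Step) -> Step:
    """Compose steps into one callable; each step's output feeds the next."""
    def run(event: Event) -> Event:
        for step in steps:
            event = step(event)
        return event
    return run


def preprocess(event: Event) -> Event:
    # Hypothetical transform: scale a raw reading (assumed 0-100) to [0, 1].
    return {**event, "x": event["raw"] / 100.0}


def predict(event: Event) -> Event:
    # Hypothetical "model": a fixed linear rule standing in for a trained model.
    return {**event, "score": 2.0 * event["x"] + 1.0}


def postprocess(event: Event) -> Event:
    # Turn the numeric score into a label for downstream consumers.
    return {**event, "label": "high" if event["score"] > 2.0 else "low"}


serve = chain(preprocess, predict, postprocess)

if __name__ == "__main__":
    print(serve({"raw": 80}))  # label "high"
    print(serve({"raw": 20}))  # label "low"
```

Because every step has the same event-in, event-out shape, changing the pipeline (for example, `chain(preprocess, predict)` to drop post-processing) touches only the composition, not the individual steps.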

Nuclio works with virtually any type of event trigger. Users write code in a Jupyter notebook and convert it into a deployable function with a single click. The result is better on-demand use of resources, since models scale up and down as needed. Nuclio optimizes GPU and CPU utilization, using half the resources while delivering better performance.

Data & Model Monitoring, Drift Detection & Auto-Retraining

Model performance monitoring is a crucial element of your production environment. Models may encounter data that differs substantially from the training data, either because of limitations in the training data or because of changes in the live data that make previous data obsolete. The Iguazio MLOps Platform detects this drift at the feature level, monitors the model’s outcomes, sends an alert when performance degrades and triggers automatic retraining. The entire data pipeline is fully managed and monitored on Iguazio.
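Feature-level drift detection compares each feature’s live distribution with its training distribution. The page does not say which statistics Iguazio uses, so this sketch assumes histogram-based total variation distance and Hellinger distance (both range from 0 to 1), a hypothetical 0.2 threshold, and a hypothetical "retrain if any feature drifts" policy.

```python
import bisect
import math


def histogram(values, edges):
    """Normalized histogram; values outside the edges are clamped into the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * n_bins


def total_variation(p, q):
    # TVD = 0.5 * sum |p_i - q_i|
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def hellinger(p, q):
    # H = sqrt(0.5 * sum (sqrt(p_i) - sqrt(q_i))^2)
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))


def detect_drift(reference, live, edges, threshold=0.2):
    """Compare each feature's live distribution with its reference (training) one."""
    report = {}
    for feature, ref_values in reference.items():
        p = histogram(ref_values, edges[feature])
        q = histogram(live[feature], edges[feature])
        tvd = total_variation(p, q)
        report[feature] = {"tvd": tvd, "hellinger": hellinger(p, q), "drift": tvd > threshold}
    return report


def should_retrain(report):
    # Hypothetical policy: retrain if any single feature has drifted.
    return any(r["drift"] for r in report.values())


if __name__ == "__main__":
    edges = {"age": [0, 20, 40, 60, 80, 100]}
    reference = {"age": [20, 25, 30, 35, 40] * 10}
    live = {"age": [60, 65, 70] * 10}
    report = detect_drift(reference, live, edges)
    print(report["age"], should_retrain(report))
```

In a real deployment the alert and retraining trigger would be wired to the monitoring service; here `should_retrain` simply returns a boolean that such a trigger could act on.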

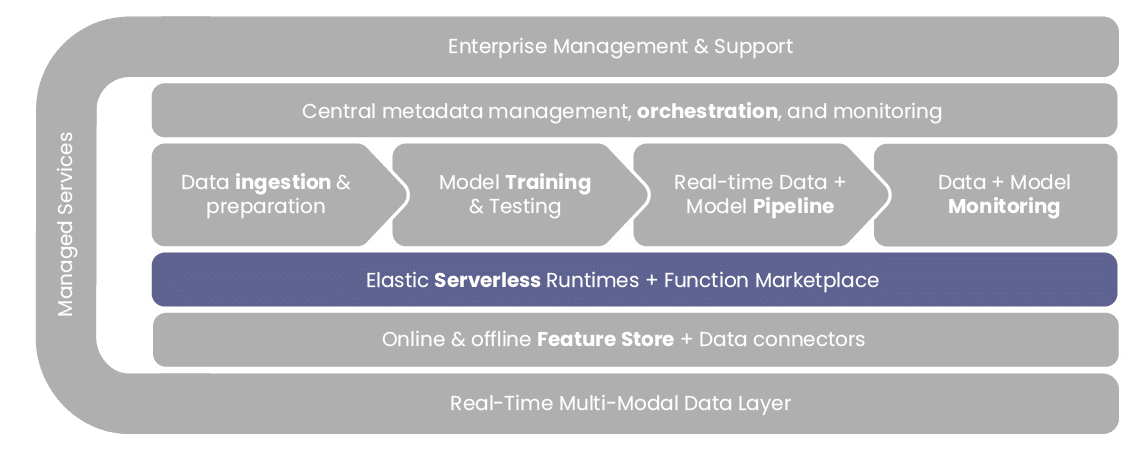

Data science teams waste months and resources on infrastructure tasks involving data collection, packaging, scaling, tuning and instrumentation. Iguazio’s serverless engines automate MLOps so projects can be deployed in a week rather than months.

Serverless enables developers to write code that is automatically transformed into auto-scaling production workloads, significantly cutting time to market and reducing resource use. While serverless typically handles only stateless, event-driven workloads, Nuclio, Iguazio’s open-source serverless framework, automates every step of the machine learning pipeline, including packaging, scaling, tuning, instrumentation and continuous delivery. The shift to microservices enables collaboration and code reuse, lets teams tune functions gradually without breaking pipelines, and ensures each function consumes the right amount of CPU, GPU and memory resources. Nuclio delivers out-of-the-box production readiness by automatically managing API security, rolling upgrades, A/B testing, logging and monitoring.

Data in the modern digital world comes in many forms and shapes: it can be structured or unstructured, arrive in streams, or be stored in records or files. This variety no longer fits the traditional data warehouse or data lake approach.

Feature Store

The Iguazio feature store provides a single pane of glass for engineering, storing, analyzing and sharing all available features, along with their metadata and statistics. The feature store abstracts away all the engineering layers and provides data scientists with easy access for reading and writing features.

One of the biggest data science challenges is maintaining the same set of features across the training and inference stages, especially for real-time rather than batch processing, and reusing the same features across the organization.

Iguazio provides a unified feature store that acts as a real-time data transformation service. Datasets are registered, ingested or imported, and transformed for training and batch processing using various managed services (Spark, Dask, Presto, Nuclio, etc.) as part of a production-ready, real-time feature engineering service. The Iguazio feature store uses the same logic to generate features for training and serving, so ML teams can build features once and use them for both offline training and online serving.
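"Build features once" means a single feature function feeds both the batch (training) path and the per-event (serving) path, so the two cannot diverge. This sketch illustrates that principle with hypothetical names (`compute_features`, `offline_features`, `OnlineFeatureService`) and a toy transaction schema; it is not the Iguazio or MLRun feature store API.

```python
from typing import Dict, List


def compute_features(txn: dict, history: List[dict]) -> dict:
    """Single feature definition shared by the offline and online paths."""
    amounts = [h["amount"] for h in history]
    avg = sum(amounts) / len(amounts) if amounts else 0.0
    return {
        "amount": txn["amount"],
        "avg_prev_amount": avg,
        "amount_ratio": txn["amount"] / avg if avg else 0.0,
        "n_prev_txns": len(history),
    }


def offline_features(transactions: List[dict]) -> List[dict]:
    # Batch path: replay history in time order to build a training set.
    rows: List[dict] = []
    seen: Dict[str, List[dict]] = {}
    for txn in sorted(transactions, key=lambda t: t["ts"]):
        hist = seen.setdefault(txn["user"], [])
        rows.append(compute_features(txn, hist))
        hist.append(txn)
    return rows


class OnlineFeatureService:
    # Online path: keep per-user state and compute features as each event arrives.
    def __init__(self) -> None:
        self.state: Dict[str, List[dict]] = {}

    def features_for(self, txn: dict) -> dict:
        hist = self.state.setdefault(txn["user"], [])
        feats = compute_features(txn, hist)
        hist.append(txn)
        return feats
```

Because both paths call `compute_features`, replaying the same events online yields exactly the training rows. A production system would keep windowed aggregates rather than the unbounded per-user history used here for brevity.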

Enriched features are accessed through low-latency, real-time key/value or time-series APIs. The platform delivers in-memory performance with the scale and lower cost of SSD/flash, eliminating the need for separate in-memory databases and for constant synchronization between online (real-time) and offline feature stores.

Iguazio provides fast, secure and shared access to real-time and historical data, including NoSQL, SQL, time series and files. It runs on flash memory as fast as in-memory databases, enabling lower costs and higher density.

Enterprise Management and Support

Iguazio’s MLOps Platform is delivered as an integrated offering designed for enterprise resiliency and functionality. IT operators do not need to write automation scripts or manage the system closely throughout the day. Instead, they set up the system through wizards, configure administration policies and register for system notifications.

Iguazio’s team of world-class engineers offers expertise, guidance, consultations, and assistance at every stage of your ML journey, with 24/7 enterprise support, service and performance monitoring and a team of seasoned customer success engineers to help you meet your unique requirements.

Managed Services

With fully managed services, ML teams can abstract away much of the complexity of bringing ML to production while running on a high-performing, scalable and secure platform that can be deployed in any cloud or on-premises.

The Iguazio MLOps Platform is a fully integrated solution with a user-friendly portal, APIs and automation. Services for scalable data and feature engineering, model management, production/real-time pipelines, versioned and managed data repositories, managed runtimes and authentication/security are available out of the box.

MLRun, Iguazio’s open-source framework, controls all functionality from data ingestion to production pipelines and provides a comprehensive web UI, CLI and API/SDK.

With MLRun, users can work from anywhere through the SDK and IDE plug-ins in their native IDE (Jupyter, PyCharm, VS Code, etc.). There’s no need to run on the Kubernetes cluster, and individual tasks or complete batch and real-time pipelines can be run with a few simple commands.

Execution, experiment, data and model tracking, as well as deployment, are handled automatically by MLRun’s serverless runtime engines. MLRun maintains a project hierarchy with strict membership and cross-team collaboration.

End-to-end data governance is fully managed, with authentication and identity management, RBAC, data security, secrets management and integration with other security services.

Customers share data securely by granting access to the data itself rather than to copies. Everyone accesses the same data, but different users see different elements of it according to predefined rules. Granular security is only possible if data has structure and metadata and if identity and security are enforced end to end. The Iguazio real-time data layer classifies data transactions with a built-in data firewall that provides fine-grained policies to control access, service levels, multi-tenancy and data life cycles. Organizations can enable data collaboration and governance across apps and business units without compromising security or performance.
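The principle of "same data, different views per user" can be sketched as role policies that filter rows and mask columns at read time, so no per-role copy is ever made. The `Policy` class, the `read` function and the role names are hypothetical; the page does not describe the data firewall’s actual policy language.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

Row = Dict[str, object]


@dataclass
class Policy:
    columns: Set[str]                                     # columns the role may read
    row_filter: Callable[[Row], bool] = lambda row: True  # rows the role may read


def read(table: List[Row], role: str, policies: Dict[str, Policy]) -> List[Row]:
    """Return the role's view of the shared table: filtered rows, masked columns.
    No copy of the dataset is stored per role; views are computed on read."""
    policy = policies.get(role)
    if policy is None:
        raise PermissionError(f"no policy defined for role {role!r}")
    return [
        {k: v for k, v in row.items() if k in policy.columns}
        for row in table
        if policy.row_filter(row)
    ]


if __name__ == "__main__":
    customers = [
        {"name": "Ana", "region": "EU", "ssn": "111", "balance": 100},
        {"name": "Bo", "region": "US", "ssn": "222", "balance": 250},
    ]
    policies = {
        "eu_analyst": Policy({"name", "region", "balance"}, lambda r: r["region"] == "EU"),
        "auditor": Policy({"name", "region", "ssn", "balance"}),
    }
    print(read(customers, "eu_analyst", policies))
    print(read(customers, "auditor", policies))
```

Denying by default (raising for roles with no policy) keeps the sketch closer to how access controls are usually configured than an allow-by-default rule would be.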

Real-time Multi-Modal Data Layer

Iguazio’s real-time data layer supports simultaneous, consistent and high-performance access through multiple industry standard APIs. Users can ingest any type of data using a variety of protocols and concurrently read the data using a different method. For example, one application can ingest an event stream while another application reads that data as a table or file.
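The example of one application ingesting a stream while another reads the same data as a table can be sketched as a single append-only log exposed through two read paths. `MultiModalStore` and its methods are hypothetical and purely in-memory. They show only that both views come from one copy of the data, not how Iguazio implements its data layer.

```python
from typing import Dict, List, Tuple


class MultiModalStore:
    """Toy single-copy store (hypothetical, not Iguazio's API): one append-only
    log of records that can be read either as a stream or as a table."""

    def __init__(self) -> None:
        self._log: List[Tuple[int, str, dict]] = []

    def append(self, key: str, record: dict) -> int:
        # Stream-style ingestion: every write gets a monotonically increasing offset.
        offset = len(self._log)
        self._log.append((offset, key, dict(record)))
        return offset

    def read_stream(self, offset: int = 0) -> List[Tuple[int, str, dict]]:
        # Stream consumer view: events from a given offset onward, in order.
        return [(o, k, dict(r)) for o, k, r in self._log[offset:]]

    def read_table(self) -> Dict[str, dict]:
        # Table view: replay the same log, upserting fields per key.
        table: Dict[str, dict] = {}
        for _, key, record in self._log:
            table[key] = {**table.get(key, {}), **record}
        return table


if __name__ == "__main__":
    store = MultiModalStore()
    store.append("sensor-1", {"temp": 20})
    store.append("sensor-2", {"temp": 18})
    store.append("sensor-1", {"temp": 22, "status": "ok"})
    print(store.read_stream(1))
    print(store.read_table())
```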

Iguazio built its solution from the ground up to maximize CPU utilization and take advantage of non-volatile memory, 100GbE RDMA, flash and dense storage, achieving extreme performance with consistency at the lowest cost. It shifts the balance from underutilized systems and inefficient code to highly parallel, real-time, resource-optimized implementations that require fewer servers.

Automated offline & online feature engineering for real-time and batch data

Rapid development of scalable data and ML pipelines using real-time serverless technology

Codeless data & model monitoring, drift detection & automated re-training

Integrated CI/CD across code, data and models, using mainstream ML, Git & CI/CD Frameworks