Discover how GenAI-powered agent co-pilots can boost call center performance by surfacing upsell opportunities in real time and guiding agents with personalized insights.

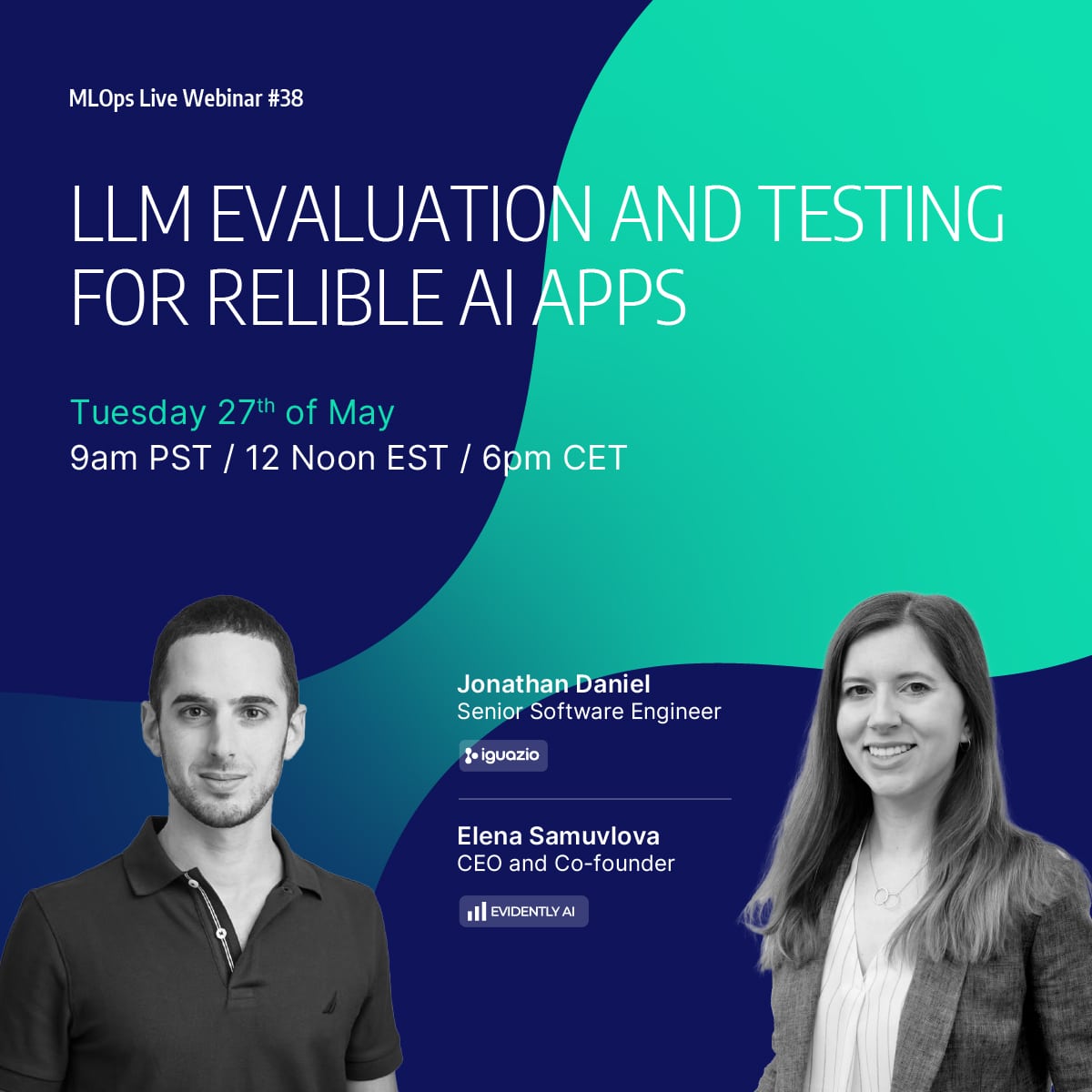

Join our webinar, featuring Evidently AI, to explore the critical role of evaluation in building and scaling LLMs!

Gain actionable insights into enhancing LLM observability and performance. Here's what you can expect to learn:

1. The importance of evaluation for running LLMs in production reliably and with confidence.

2. Real-world risks such as hallucinations and system failures.

3. Techniques for testing, monitoring, and improving LLM performance.

4. Strategies to integrate evaluation seamlessly into your workflows.

This session will equip you with the tools to optimize your LLMs effectively. Don’t miss it!