Introducing MLRun v1.10: New tools for building agents and monitoring gen AI

Gilad Shaham | December 2, 2025

MLRun v1.10, the latest version of our open source AI orchestration framework, is available today to all users.

Iguazio started out as a platform to operationalize enterprise machine learning projects. We've been through quite a few waves of AI in a short time, but the underlying challenge is the same: getting from experimentation to production remains a major blocker. Whether a use case relies on a deterministic model or on the latest frontier model at the top of the leaderboard, enterprise teams use Iguazio to automate, accelerate and scale the building, deployment and management of AI and gen AI applications. Now that the agentic era is upon us, we're tackling the novel challenges of production-grade agentic AI systems.

In the agentic organization, monitoring is non-negotiable. Even passive LLMs introduced novel risks that forced organizations to rethink how AI systems are governed. Now that pioneering teams are deploying autonomous, proactive AI systems, robust monitoring has become essential.

This release includes three key categories of improvements:

Build Better Agents

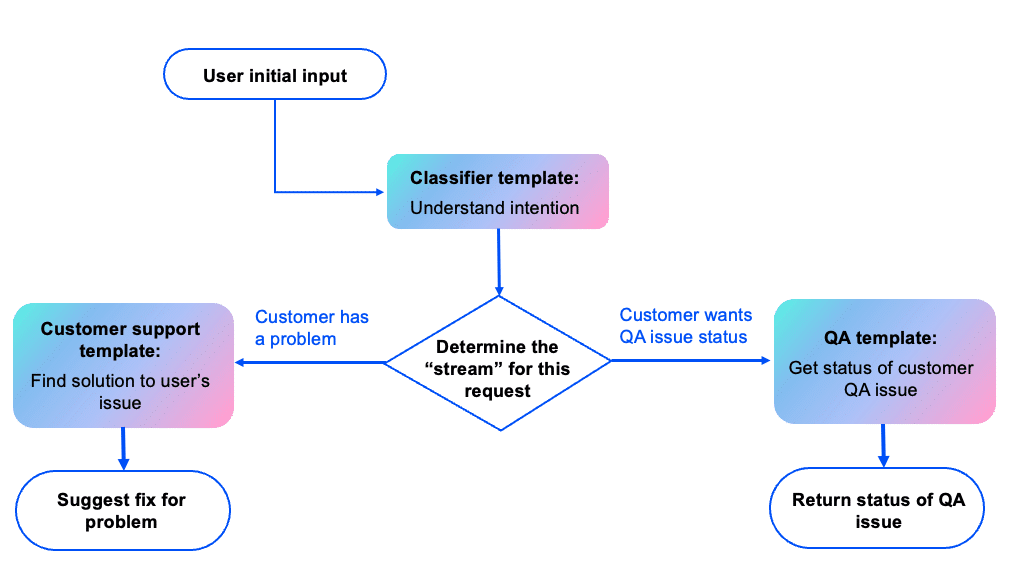

Until now, deploying agentic AI meant fragmented workflows and manual optimizations, a complexity that could add weeks of extra work before an agent launched. In v1.10 we're introducing features that address the unique requirements of agent development, making it easier to experiment, reuse and swap components, deploy and iterate without breaking the workflow.

The LLM Prompt Artifact turns prompt engineering into a structured, repeatable process, making it easier to build, test, and deploy agents that work.
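To make the idea of a "structured, repeatable" prompt concrete, here is a minimal sketch in plain Python. It is not the MLRun API: the `PromptArtifact` class, its fields and its methods are hypothetical and exist only to illustrate the concept of treating a prompt as a versioned artifact that bundles the template, the target model and its generation settings.

```python
import hashlib
import json
from dataclasses import dataclass, field
from string import Formatter


@dataclass
class PromptArtifact:
    """Hypothetical illustration of a versioned prompt artifact (not the MLRun API)."""

    name: str
    template: str  # prompt text containing {placeholders}
    model: str  # identifier of the target model (illustrative)
    generation_config: dict = field(default_factory=dict)

    def placeholders(self) -> set:
        # Collect the {field} names referenced in the template
        return {f for _, f, _, _ in Formatter().parse(self.template) if f}

    def version(self) -> str:
        # Content hash: changing the template, model or config yields a new version
        payload = json.dumps(
            {"template": self.template, "model": self.model, "config": self.generation_config},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()[:12]

    def render(self, **values) -> str:
        # Fail fast if a required prompt variable was not supplied
        missing = self.placeholders() - set(values)
        if missing:
            raise ValueError(f"missing prompt variables: {sorted(missing)}")
        return self.template.format(**values)


prompt = PromptArtifact(
    name="support-agent",
    template="Answer as a {persona}: {question}",
    model="example-model",
    generation_config={"temperature": 0.2},
)
print(prompt.version(), prompt.render(persona="support agent", question="How do I reset my password?"))
```

Because the version is derived from the prompt's content, two runs that used the same template, model and settings can be compared directly, while any tweak produces a distinct, traceable version.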

The Future of Monitoring for Gen AI

We are continuing to evolve monitoring in MLRun to reflect the unique requirements of enterprise AI governance. A comprehensive generative AI monitoring system must be able to apply multiple methods to pinpoint where problems exist. MLRun's open architecture gives you the flexibility to use multiple solutions and easily swap them out. In this release we built a unified dashboard that consolidates all your monitoring apps in one place. This view lets you track which endpoints have detected issues, drill down into app-level metrics, inspect shard-level performance and more.

Enhanced LLM Inference Capabilities

MLRun v1.10 introduces improved LLM inference capabilities, enabling seamless integration of locally hosted and remote models into an inference graph. Users can call and orchestrate multiple models, whether hosted on-premises or in the cloud, within a single workflow. With a streamlined inference process, teams can build more robust and scalable AI pipelines that draw on the best of both local and remote resources.
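As a rough illustration of what "orchestrating local and remote models in one graph" means, the sketch below chains two steps over a shared event: a stand-in for a locally hosted model that classifies intent, followed by a stand-in for a remote LLM that produces the answer. Everything here is hypothetical plain Python, including the `InferenceGraph` class and both model functions; no network call is made, and MLRun's own serving graph API differs, so see the documentation for the real interface.

```python
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


def local_classifier(event: Dict) -> Dict:
    # Stand-in for a locally hosted model: a trivial keyword-based intent classifier
    text = event["text"].lower()
    event["intent"] = "billing" if "invoice" in text or "charge" in text else "general"
    return event


def remote_llm(event: Dict) -> Dict:
    # Stand-in for a call to a remote LLM endpoint (simulated; nothing leaves the process)
    event["answer"] = f"[remote-llm] handling a {event['intent']} request"
    return event


class InferenceGraph:
    """Hypothetical minimal graph: steps run in order, each receiving and returning the event."""

    def __init__(self) -> None:
        self.steps: List[Step] = []

    def add(self, step: Step) -> "InferenceGraph":
        self.steps.append(step)
        return self  # allow chaining

    def run(self, event: Dict) -> Dict:
        for step in self.steps:
            event = step(event)
        return event


graph = InferenceGraph().add(local_classifier).add(remote_llm)
print(graph.run({"text": "Why was I charged twice?"})["answer"])
```

The design point is that each step only depends on the event it receives, so a local model can be swapped for a remote one (or vice versa) without changing the rest of the pipeline.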

For complete technical details, read the technical blog on the MLRun site and check out the documentation.