Operationalizing Data Science

Adi Hirschtein | April 8, 2019

Imagine a system where one can easily develop a machine learning model, click on some magic button and run the code in production without any heavy lifting from data engineers...

Why? Because the market is currently struggling with the entire data-science-to-production pipeline. We’ve seen lots of cases where data scientists work on their laptops with just a subset of data, using their own tools which are typically very different from production tools. The result is a long delay from the moment their model is ready to the point it actually runs.

Shifting the Paradigm

We at Iguazio believe that the current paradigm is broken - there must be an easier way, based on the following principles:

- Data scientists work on datasets that are similar to the ones in production, with minimal deviation. This means that their behavior in training and in production is the same.

- Data is not moved around or duplicated just for the sake of building or training a model.

- The transition from training to inferencing is smooth: once a prediction pipeline (model and ETL) is created, it doesn’t require any further development effort in order to work in production.

- Updating models is automatic without requiring human interference.

- Models are validated automatically as an ongoing process and new models are automatically transferred to production.

- The environment supports languages and frameworks that are popular with data scientist while at the same time enables popular analytics framework for data exploration.

- Data scientist are able to collaborate and share notebooks in a secured environment, making sure users view data securely, based on their individual permission.

- GPU resources are easily shared and used by data science teams without DevOps overhead.

The Solution

Let’s take a look at what Iguazio does with each of the four steps in the data science life cycle: data collection, data exploration, modeling and deployment.

Data Collection

Before doing anything, we need the data… sounds simple but even that basic step is often challenging. Why? Because in some cases data is collected from various systems that are working with different protocols and bringing them together in real-time is a challenging task in and of itself. Not to mention the fact that there is often a need to merge, transform and cleanse data before saving it to a centralized data store.

Iguazio has a serverless functions framework which allows developers to build and run auto-scaling applications without worrying about managing servers. Nuclio provides a broad set of collectors that makes it extremely easy to collect data from various sources, merge streaming on the fly, transform data and store it in different formats on its data services. Iguazio also supports a native time series format as well as a key value structure. This provides lots of flexibility when storing different data types.

We’ve seen that many workloads are time series by nature and storing them in a simple key value or relational structure is far from being efficient. Iguazio’s built-in time series structure makes it optimal for those use cases (more details in an upcoming post about time series).

Exploration

Exploration is meant for us to try and understand what patterns and values our data has. The idea here is to start deriving hidden meanings and gaining a sense of which model might be suitable for our purposes. At the end of this process we extract features and test them until we have something in place.

The key point here is that with Iguazio, users work on a central platform. Data scientists open up a Jupyter notebook from Iguazio’s platform and start working on any data set that resides within the platform. There is no need for a local copy. You can work with popular data science tools such as Pandas and R, as well as analytics frameworks such as Spark and even Presto for exploration. Since all these frameworks are built into Iguazio, our users have an out-of-the-box comprehensive analytics and distributed environment. Other solutions require spawning up an EMR cluster for Spark, or using SQL services with additional tools and working with Jupyter in a different data set. Iguazio enables all these popular tools in one place, on the same data set.

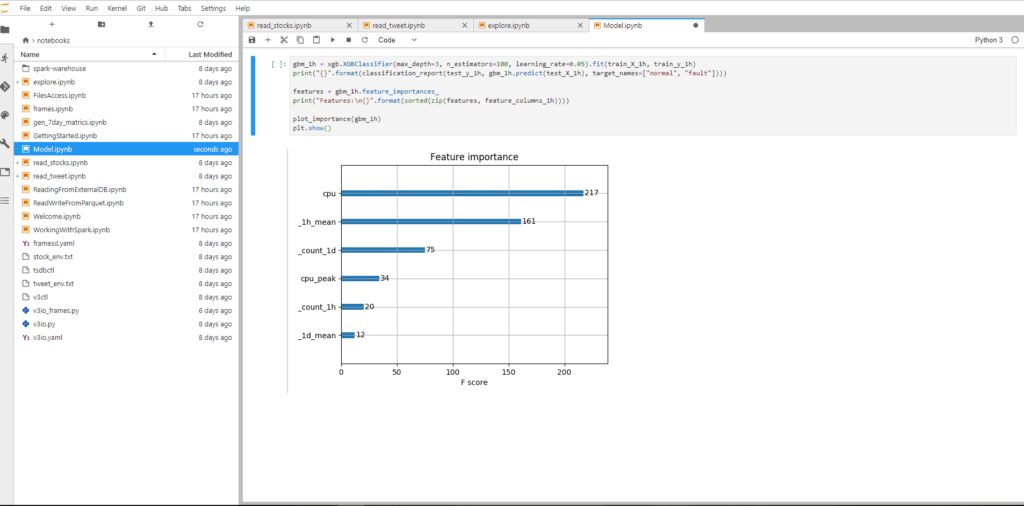

Modeling

Now that we have the clean dataset, we run all kinds of analysis and machine learning algorithms on it. After a few iterations, we decide which algorithm best fits our needs and which feature vector is right for our model. We often use a prediction algorithm to enhance our business decision making. The model can be developed in Python using a Jupyter notebook, validated against a real-time production dataset.

Deployment

Iguazio takes the same Python code and transfers it to a Nuclio function by running a few lines of code.

We create a Nuclio function with its relevant dependencies and environment variables while passing the same parameters that were used in the training notebook. The function is deployed with all its relevant environment variables and annotations and then triggered as part of the production pipeline. There is no need for any data engineering or code changes.

In most cases the function reads data in production from the same table that was used for the training process (stored as key value or a time series table within Iguazio). The nature of Nuclio enables running the model as distributed and in large scale.

Check out this link for detailed information about the Jupyter Nuclio integration https://github.com/nuclio/nuclio-jupyter. Note that Nuclio can run models that require GPU resources in a very efficient way, as it is the first serverless framework in the market to support GPUs.

Summary

Change the paradigm and think through the entire pipeline as a whole, ensuring you've got all the building blocks of analytics and data services in place, alongside the proper orchestration for exploration, training and eventually deployment for running models in production. Writing machine learning and artificial intelligent apps doesn’t have to be as complicated as it's been so far.

Now go and play...!

Adi Hirschtein

Iguazio's Director of Product Management