The Spark Service

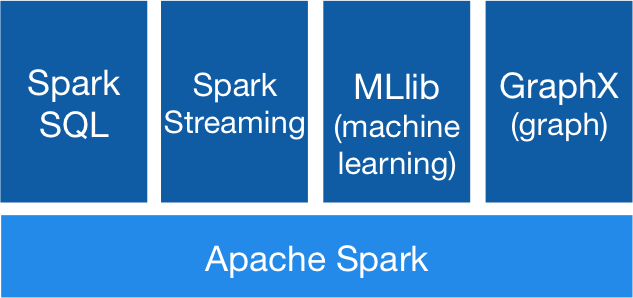

The platform integrates the Apache Spark data engine for large-scale data processing and makes it available as a user application service. You can use Spark together with other platform services to run SQL queries, stream data, and perform complex data analysis, both on data that is stored in the platform's data store and on external data sources such as RDBMSs or traditional Hadoop "data lakes". Spark support is provided by a stack of Spark libraries: Spark SQL and DataFrames for working with structured data, Spark Streaming for streaming data, MLlib for machine learning, and GraphX for graphs and graph-parallel computation. You can combine these libraries in the same application.

Spark is optimized to run on top of the platform's data services: the processing engine implements predicate pushdown and column pruning, so that data is filtered as close as possible to the source. These techniques can speed up a query by, for example, filtering data before it is transferred over the network, filtering data before it is loaded into memory, or skipping entire files or chunks of files.
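To make the two optimizations concrete, here is a minimal plain-Python sketch of the idea. It is a toy model, not the platform's or Spark's implementation: the `Chunk` class, the `scan` function, the `price` column, and the sample data are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """A block of stored rows plus min/max statistics for the "price" column."""
    rows: list          # list of dicts, one dict per row
    min_price: float    # smallest "price" value in this chunk
    max_price: float    # largest "price" value in this chunk


def scan(chunks, min_price, columns):
    """Return (rows, chunks_read) for rows with price >= min_price.

    Only the requested columns are kept in the returned rows.
    """
    result = []
    chunks_read = 0
    for chunk in chunks:
        # Predicate pushdown: the statistics prove that no row in this
        # chunk can match, so the chunk is skipped without being read.
        if chunk.max_price < min_price:
            continue
        chunks_read += 1
        for row in chunk.rows:
            if row["price"] >= min_price:
                # Column pruning: keep only the columns the query needs.
                result.append({name: row[name] for name in columns})
    return result, chunks_read


# Hypothetical sample data: two chunks of three-column rows.
CHUNKS = [
    Chunk([{"id": 1, "price": 5.0, "note": "a"},
           {"id": 2, "price": 9.0, "note": "b"}], 5.0, 9.0),
    Chunk([{"id": 3, "price": 50.0, "note": "c"},
           {"id": 4, "price": 120.0, "note": "d"}], 50.0, 120.0),
]
```

Calling `scan(CHUNKS, 100.0, ["id", "price"])` reads only the second chunk and returns a single row without the `note` column. In a Spark job you would not write this logic yourself; you would express the column selection and the filter on a DataFrame and leave it to the data source to decide what can be skipped.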

The platform supports the standard Spark Dataset and DataFrame APIs in Scala, Java, Python, and R. In addition, it extends and enriches these APIs via the Iguazio Spark connector, which features a custom NoSQL data source for reading and writing data in the platform's NoSQL store using Spark DataFrames. This data source supports table partitioning, data pruning and filtering (predicate pushdown), "replace"-mode and conditional updates, defining and updating counter table attributes (columns), and optimized range scans. The platform also supports the Spark Streaming API. For more information, see the Spark APIs reference.
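Because a custom data source plugs into the standard DataFrame reader and writer, the calling pattern can be sketched with generic Spark calls only. This is a sketch under assumptions: the helper names are hypothetical, and the data-source name and table paths are passed in as arguments because this section does not state them (take the actual values and connector-specific options from the Spark APIs reference).

```python
def read_table(spark, data_source, table_path):
    """Load a table into a DataFrame through a named Spark data source."""
    return spark.read.format(data_source).load(table_path)


def append_rows(df, data_source, table_path):
    """Append the rows of a DataFrame to a table through the same data source."""
    df.write.format(data_source).mode("append").save(table_path)


def copy_matching_rows(spark, data_source, source_path, target_path, condition):
    """Read a table, keep rows matching a SQL condition, and append them elsewhere.

    `condition` is a SQL expression string such as "age > 30"; a data source
    that supports predicate pushdown can apply it while reading.
    """
    df = read_table(spark, data_source, source_path).filter(condition)
    append_rows(df, data_source, target_path)
    return df
```

The helpers take an existing `SparkSession` as `spark`, so they do not import PySpark themselves and work with whatever session the job has already created.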

You can run Spark jobs on your platform cluster from a Jupyter web notebook; for details, see Running Spark Jobs from a Web Notebook.

You can also run Spark jobs by executing

You can also use the Spark SQL and DataFrames API to run Spark over a Java Database Connectivity (JDBC) connector.
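As an illustration, the following sketch reads a table from an external database through Spark's built-in `jdbc` data source. The option keys (`url`, `dbtable`, `user`, `password`, `driver`) are standard Spark JDBC options; the helper names and any URL, table, or credentials you pass in are hypothetical, and the matching JDBC driver is assumed to be on the Spark classpath.

```python
def jdbc_options(url, table, user, password, driver=None):
    """Build the option map for Spark's built-in "jdbc" data source."""
    options = {
        "url": url,            # for example, a jdbc:postgresql://... URL
        "dbtable": table,      # table name (or a parenthesized subquery with an alias)
        "user": user,
        "password": password,
    }
    if driver is not None:
        options["driver"] = driver  # fully qualified JDBC driver class name
    return options


def read_jdbc_table(spark, options):
    """Load the configured table into a DataFrame using an existing SparkSession."""
    return spark.read.format("jdbc").options(**options).load()
```

The resulting DataFrame can then be queried with the DataFrame API, or registered with `createOrReplaceTempView` and queried with Spark SQL.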

In your Spark code, create a Spark session at the start of the flow (for example, assigned to a spark variable) and stop the session at the end of the flow to release resources (for example, by calling spark.stop()).
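One way to make sure the session is always stopped, even when the job fails partway through, is to wrap it in a context manager. The following is a minimal sketch, assuming PySpark is installed where the job runs; `create_spark_session`, `managed_session`, `run_example`, and the application name are hypothetical names chosen for illustration.

```python
from contextlib import contextmanager


def create_spark_session(app_name="example-app"):
    """Create (or reuse) a SparkSession; requires the pyspark package."""
    # Imported lazily so that managed_session can be used and tested
    # with any session-like object, without Spark installed.
    from pyspark.sql import SparkSession
    return SparkSession.builder.appName(app_name).getOrCreate()


@contextmanager
def managed_session(factory=create_spark_session):
    """Yield a session created by `factory` and always stop it afterwards."""
    spark = factory()
    try:
        yield spark
    finally:
        # Release the session's resources even if the job raised an error.
        spark.stop()


def run_example():
    """Example flow: create the session, run a small job, stop the session."""
    with managed_session() as spark:
        spark.range(5).show()
```

Calling `run_example()` on a machine with PySpark creates the session, prints a five-row DataFrame, and then calls `spark.stop()` on the way out of the `with` block.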